Slide talk: Demystifying GPT-3

November 09, 2020 - The transformer

For another meeting of our reinforcement/machine learning reading group, I gave a talk on the underlying model of GPT-2 and GPT-3, the ‘Transformer’. There are two main concepts I wanted to explain: positional encoding and attention.

During the talk, I found that two things were most confusing. The first is the definition of positional encoding. In fact, it is just a deterministic vector added to each word, based on its position. The second is the way attention is visualised as a heatmap with the input sentence on both axes. I found this quite puzzling at first, and in many places it is not properly explained. Below, I will clarify these two things. For the slides, which can be used as an introduction to this post, see here. Many references to other introductory sources can also be found there.

Positional encoding

Positional encoding makes up for the lack of ‘sequentialness’ of the input data by placing an encoded sequence of words (each word being a vector in $\mathbb{R}^d$) on a kind of spiral staircase in $\mathbb{R}^d$. The $t$-th step of the staircase is the vector

$$p_t = \big(\sin(\omega_0 t), \cos(\omega_0 t), \ldots, \sin(\omega_{d/2-1} t), \cos(\omega_{d/2-1} t)\big),$$

where $\omega_i = 10000^{-2i/d}$. Each step is simply added to the corresponding word in the input sequence. Since the added values are bounded between $-1$ and $1$, they are harmless to add to the embedding layer. But the interesting property they have is that going up the staircase by a fixed number of steps is a linear operation. That is, for every offset $k$ there is a linear transformation $T_k$, not depending on $t$, such that for every $t$:

$$T_k \, p_t = p_{t+k}.$$

A proof can be found here. According to the original paper, this allows the model 'to easily learn to attend by relative positions'.
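To make the staircase picture concrete, here is a minimal sketch in plain Python. It assumes the sinusoidal scheme of the original paper with interleaved sine and cosine entries and base 10000; the function names `positional_encoding` and `shift`, and the small dimension `d=8`, are my own choices for illustration. The point is that `shift` uses only the offset `k`, never the position `t`, so it is one fixed linear map (a rotation on each sine/cosine pair).

```python
import math


def positional_encoding(t, d=8, base=10000.0):
    """Return the t-th step of the staircase as a list of d floats (d even)."""
    step = []
    for i in range(d // 2):
        omega = 1.0 / base ** (2 * i / d)  # frequency of the i-th (sin, cos) pair
        step.extend([math.sin(omega * t), math.cos(omega * t)])
    return step


def shift(step, k, d=8, base=10000.0):
    """Apply the linear map T_k: rotate each (sin, cos) pair by the angle omega * k.

    The coefficients depend only on the offset k, not on the position t.
    """
    out = []
    for i in range(d // 2):
        omega = 1.0 / base ** (2 * i / d)
        s, c = step[2 * i], step[2 * i + 1]
        cos_k, sin_k = math.cos(omega * k), math.sin(omega * k)
        out.append(s * cos_k + c * sin_k)  # sin(omega * (t + k))
        out.append(c * cos_k - s * sin_k)  # cos(omega * (t + k))
    return out


# Going up 5 steps from position 3 lands on position 8 (up to rounding).
p3, p8 = positional_encoding(3), positional_encoding(8)
max_error = max(abs(a - b) for a, b in zip(shift(p3, 5), p8))
```

The rotation coefficients are just the angle addition formulas for sine and cosine, which is the heart of the proof referred to above.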

Attention

The most important ingredients of the transformer are the attention layers. For simplicity, I restrict myself here to the self-attention layers in the encoding part of the transformer. These precede the feed-forward layers in the encoder. They are supposed to equip an input sentence with a representation of ‘context’. In a single attention head, this context is a linearly transformed representation of the input sequence, weighted by attention.

Self-attention is learned by the model in the following way. An input word $x$ is transformed by linear network layers, with learned weight matrices $W^K$ and $W^V$, into a key and a corresponding value:

$$k = W^K x, \qquad v = W^V x.$$

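On top of this, each word $x$ may be transformed into a query $q = W^Q x$, which has the same dimension $d_k$ as a key. Any query can act on any key by taking the inner product. Given a query $q$, the attention applied to the value $v_i$ of a key $k_i$ is given by a softmaxed scaled dot-product:

$$a_i(q) = \frac{\exp\big(q \cdot k_i / \sqrt{d_k}\big)}{\sum_j \exp\big(q \cdot k_j / \sqrt{d_k}\big)}.$$

In the model, this is computed for every query generated from the input sequence, and the output for a query is the attention-weighted sum $\sum_i a_i(q) \, v_i$ of the values. So what the attention head does is multiply the values with an attention matrix:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V,$$

where the softmax is taken row by row, and the rows of $Q$, $K$ and $V$ are all queries, keys and values computed from the input sequence.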
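The computation done by a single attention head can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: queries, keys and values are passed in directly as lists of rows (the learned linear layers that produce them are left out), and the names `softmax` and `attention` are my own.

```python
import math


def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention; Q, K, V are lists of rows.

    Returns the attention matrix (one row per query, one column per key)
    and the output (one attention-weighted sum of values per query).
    """
    d_k = len(K[0])
    weights = [
        softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K])
        for q in Q
    ]
    output = [
        [sum(w * v[j] for w, v in zip(row, V)) for j in range(len(V[0]))]
        for row in weights
    ]
    return weights, output


# Two queries, each strongly aligned with one of the two keys.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[10.0, 0.0], [0.0, 10.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
weights, output = attention(Q, K, V)
```

Here `weights` is the matrix that gets drawn as a heatmap: row `i` shows how the `i`-th query distributes its attention over all keys. In this toy input each query puts almost all of its weight on the key it is aligned with, so each output row is close to the matching row of `V`.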

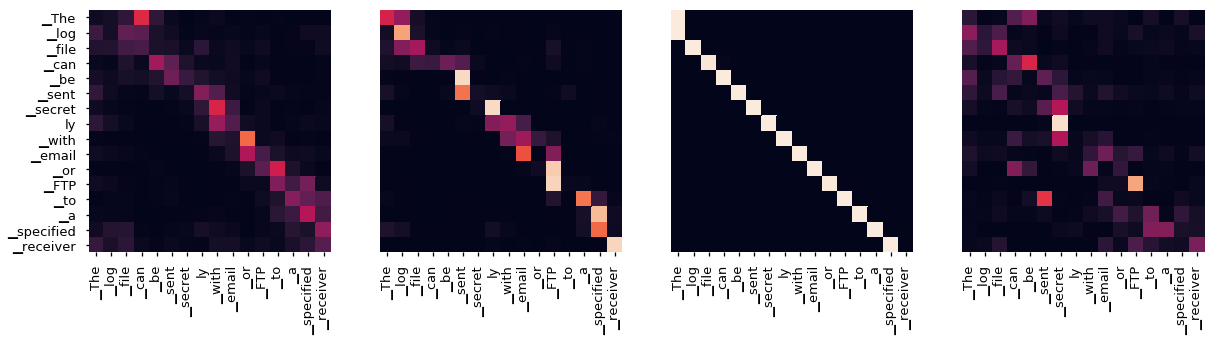

This attention matrix can be visualised. Since every pair of words determines a query and a key, it computes some attention. This can be visualised in a heatmap, where the axes are labelled by the input sentence. An example can be found in the The Annotated Transformer by Harvard NLP:

The picture displays the attention weights in the first four self-attention heads in the second encoder layer. But note that the labels on the axes do not really indicate the words themselves. Rather, they stand for learned linear transformations of the words. The vertical axis contains the queries, and the horizontal axis contains the keys. Interestingly, the third head seems to have learned to turn each word into a query that, given the input keys, directs attention to the value of the word preceding it. Learning this type of shift in position is exactly what positional encoding is supposed to enable!
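A toy experiment suggests why such a 'look at the preceding word' head is easy to realise. The sketch below is not taken from any trained model: it ignores the word embeddings entirely and uses the bare sinusoidal positional vectors as keys, with the positional vector shifted back by one step as query (the names `pe`, `dot` and `preceding_word_head` are hypothetical helpers). Since shifting by a fixed offset is a linear map, this query could be produced by a single linear layer.

```python
import math


def pe(t, d=16, base=10000.0):
    """Sinusoidal positional vector for position t (d even)."""
    out = []
    for i in range(d // 2):
        omega = 1.0 / base ** (2 * i / d)
        out += [math.sin(omega * t), math.cos(omega * t)]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def preceding_word_head(n):
    """For each query position t, return the key position with the largest score.

    Keys are pe(s); the query for position t is pe(t - 1), which equals the
    linear shift by -1 applied to pe(t).
    """
    keys = [pe(s) for s in range(n)]
    queries = [pe(t - 1) for t in range(n)]
    return [max(range(n), key=lambda s: dot(q, keys[s])) for q in queries]


argmax_key = preceding_word_head(6)
# For every position t >= 1, the highest-scoring key is the one at t - 1.
```

The score between the query for position `t` and the key at position `s` is a sum of cosines of multiples of `s - t + 1`, which is largest exactly when `s = t - 1`. After the softmax, this shows up in the heatmap as a bright line just below the diagonal.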